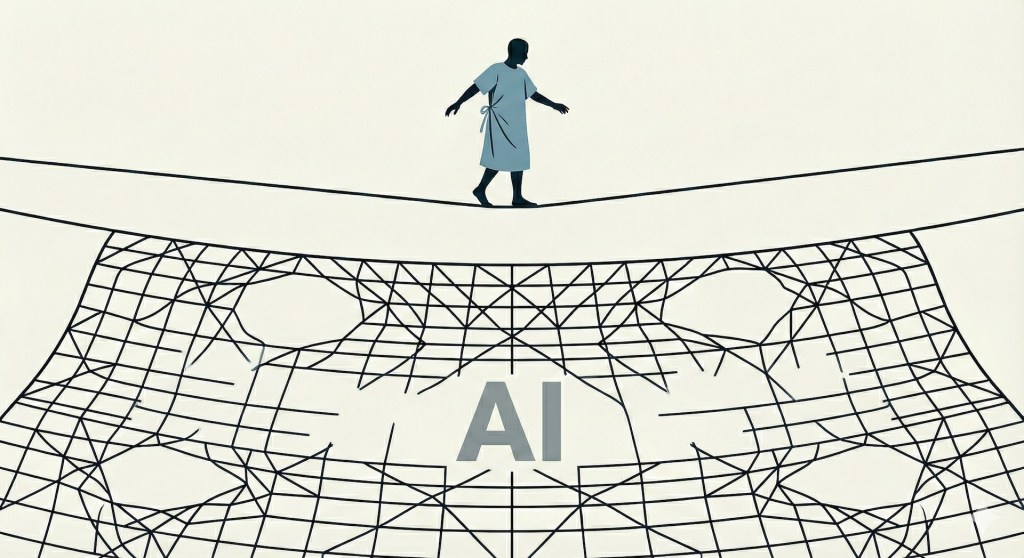

A new study stress-tested medical AI on real-world clinical decision-making and asked a simple question:

Can we make it safe just by cranking up the “be careful” dial?

The answer: No.

When the models were tuned to be more cautious, they didn’t stop making mistakes. They just traded them: fewer loud, obvious errors and more quiet, easy-to-miss ones.

The real punch line:

Clinical AI safety isn’t a vibes slider you set to “cautious”; it’s a whole-system problem: who uses it, how it’s checked, and what guardrails sit around an inherently unreliable but powerful tool.
